I recovered almost all of the FDS subsystem after Fiodor switched the hardware back on.

1) I checked whether all the hardware was online, especially everything connected via Ethernet. I did this with the ping command, checking the sqb1, sqb2, fcim and fcem mot switches, all the EQB1 PDUs, the 4 DDS boards in the DET Eroom and the phasecam1 PC.

I asked Fiodor to power-cycle all the devices that were not responding: g25squeeze4 and the SQB2 motswitch.

2) I asked Fiodor to restore the Ethernet configuration of all the R&S power supplies (6 in total).

3) Once all the hardware was on, I made a trend of the main actuator set points (GR M5 and M7, SQB1, FCIM and FCEM MAR_TX and TY, EQB1_HD_M6) and restored the main set points.

4) I managed to close the FCEM control loop easily, although for FCEM I first had to ask Fiodor to switch the SLED of the optical lever back on.

5) I had to recenter SQB2 in all DOFs, whereas SQB1 only needed recentering in the vertical.

6) Once all the suspended benches were recovered, I could align the FC reflection and close the galvo loop. After that I also recovered the FC flashes on the FCEM GR PD.

7) I managed to close the CC PLL.

8) For the SC PLL I had issues switching on the PyServer. I solved this by restoring the communication through an old interface that does not use the PyServer; once the USB communication was restored, I was also able to switch on the PyServer.

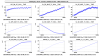

9) Once the CC PLL was locked, I asked Fiodor to realign the Mach-Zehnder in the squeezer. After that I also realigned the HD detector and closed all the HD loops (CC, CCCoarse and AA). I measured 8.7 dB of ASQZ and 5.8 dB of SQZ, with slightly different phases with respect to the past: 1.15 for ASQZ and 2.6 for SQZ. I had to unclip the beam in the retroreflector by moving 380 steps in V and 600 in H.
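The dB levels in step 9 can be converted into linear noise variances relative to shot noise with V = 10^(dB/10). As a rough consistency check, the sketch below also inverts a simple pure-loss model, V± = η·e^(±2r) + 1 - η, to estimate the total detection efficiency η and the generated squeezing e^(2r). This model is an assumption that this entry does not make: it neglects phase noise, so the η it returns is only indicative and should not be read as a measured loss budget.

```python
import math


def db_to_variance(db: float) -> float:
    """Convert a noise level in dB relative to shot noise into a linear variance ratio."""
    return 10 ** (db / 10)


def pure_loss_estimate(asqz_db: float, sqz_db: float) -> tuple[float, float]:
    """Estimate (eta, e^{2r}) from measured ASQZ and SQZ levels.

    ASSUMPTION: pure-loss model V+ = eta*e^{2r} + 1 - eta and
    V- = eta*e^{-2r} + 1 - eta, with phase noise neglected.
    """
    v_plus = db_to_variance(asqz_db)   # anti-squeezed quadrature, above shot noise
    v_minus = db_to_variance(-sqz_db)  # squeezed quadrature, below shot noise
    # From the model: (V+ - 1) / (1 - V-) = e^{2r}
    gain = (v_plus - 1) / (1 - v_minus)
    # Then eta follows from V+ - 1 = eta * (e^{2r} - 1)
    eta = (v_plus - 1) / (gain - 1)
    return eta, gain


eta, gain = pure_loss_estimate(8.7, 5.8)
print(f"ASQZ variance: {db_to_variance(8.7):.2f}")
print(f"SQZ variance:  {db_to_variance(-5.8):.3f}")
print(f"eta ~ {eta:.2f}, generated squeezing ~ {10 * math.log10(gain):.1f} dB")
```

Comparing η between runs is more meaningful than its absolute value, since any phase noise would bias this estimate.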

Still missing: check the alignment of the SC into the OPA, lock the Main PLL (beat note still not found; it will be checked tomorrow) and lock the FC on green once the Main PLL is recovered.