After the realignment of the West Arm we attempted the lock acquisition. Apart from some issues with the PSTAB, the ITF unlocked because of the WE violin modes during the transition to LN2 (Fig. 1).

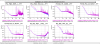

We looked at the notches in the control filter and noticed that some of them were miscentered with respect to the excited modes. The DARM correction was sustaining the noise at those frequencies, so we slightly modified the notch filter to limit the reintroduction of noise at the mode frequencies (see Fig. 2 for a comparison).
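To illustrate why a miscentered notch matters, the sketch below evaluates the magnitude of a generic second-order notch at the frequency of an excited mode. The mode frequency, the notch Q and the helper name `notch_gain` are illustrative assumptions; they are not the actual DARM filter parameters.

```python
import math

def notch_gain(f, f0, q):
    """Magnitude at frequency f of H(s) = (s^2 + w0^2) / (s^2 + (w0/q) s + w0^2)."""
    w = 2 * math.pi * f
    w0 = 2 * math.pi * f0
    numerator = w0**2 - w**2                        # s^2 + w0^2 evaluated at s = j*w
    denominator = complex(w0**2 - w**2, w0 * w / q)
    return abs(numerator / denominator)

# Hypothetical numbers: a mode at 450.0 Hz and a notch with Q = 500.
f_mode = 450.0
q = 500.0
centered = notch_gain(f_mode, f0=450.0, q=q)      # notch sits exactly on the mode
miscentered = notch_gain(f_mode, f0=450.5, q=q)   # notch is 0.5 Hz away from the mode

print(f"gain at the mode, centered notch:    {centered:.3e}")
print(f"gain at the mode, miscentered notch: {miscentered:.3f}")
```

With a narrow notch, even a small offset between the notch center and the mode leaves most of the correction at the mode frequency unattenuated, which is the mechanism suspected above.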

Most likely the modes will damp naturally with their own decay time tau (about 6 hours).
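As a rough guide to how long the natural damping takes, the sketch below assumes a simple exponential decay of the mode amplitude, exp(-t/tau), with tau = 6 hours. Whether the quoted tau refers to amplitude or energy is not stated in the entry, so treating it as an amplitude decay time is an assumption, and the function names are illustrative.

```python
import math

TAU_HOURS = 6.0  # approximate decay time quoted in the entry

def remaining_fraction(t_hours, tau_hours=TAU_HOURS):
    """Fraction of the initial amplitude left after t_hours, assuming exp(-t/tau)."""
    return math.exp(-t_hours / tau_hours)

def time_to_reach(fraction, tau_hours=TAU_HOURS):
    """Hours needed for the amplitude to fall to the given fraction of its initial value."""
    return -tau_hours * math.log(fraction)

print(f"after 6 h:  {remaining_fraction(6.0):.2f} of the initial amplitude")
print(f"after 12 h: {remaining_fraction(12.0):.2f} of the initial amplitude")
print(f"factor-10 reduction after {time_to_reach(0.1):.1f} h")
```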

We leave the ITF in CARM null 1f, with the PSTAB loop open.

After the problems encountered a few days ago with the Fmoderr process, today we rechecked both calibrations (MC and LNFS). We found that the mechanical one was slightly underestimated and corrected it with the new value (8.5e-9 --> 7e-9).

We tested the new value twice and everything seems to work correctly.

During the night we left the PSTAB open and no INJ unlocks were observed.

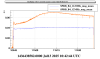

We optimized the alignment of the beam in the AOM before the PMC, which is the actuator for the PSTAB (the beam must be close to the edge, see photo 1). We did this by looking at the PMC transmission while scanning and minimizing the 01 misalignment mode. Note that there are strange structures close to the 01 mode (photo 2). An improvement can be observed up to 10 Hz (plot 3).

Another test we would like to perform is lowering the chiller temperature setpoint for the Neovan head from 16° to 15°; we did not do it this morning, to keep it decoupled from the alignment work.

Both the Sc and Sa crates were restarted and all DSPs successfully loaded their boot code over Ethernet.

For ScWEPSDf and ScWEPSDm we had to manually download the DSP code due to a known cfg mismatch. All other DSPs automatically loaded and started correct code.

All DSPs are now reachable via Ethernet.

ITF found in TROUBLESHOOTING mode due to the WE fiber connection loss, see previous entries.

At 6:00 UTC the planned maintenance started; below is the list of the activities communicated to the control room:

- external firm at WE to repair the broken fibers;

- cleaning operations of the experimental areas;

- infrastructure works at NE, concluded at 10:00 UTC;

- INJ team in the Laser Lab from 9:00 UTC to 9:30 UTC;

- periodic tasks performed by the operator:

  - TCS chiller refill;

  - lock and scan of the OMC; after the measurement the DET_MAIN automation node got stuck in a loop;

  - check of the thermal camera reference;

  - check of TCS powers:

|    | CH [W] | OUTER [W] | INNER [W] |
|----|--------|-----------|-----------|
| WI | 0.312  | 0.289     | 0.023     |
| NI | 0.66   | 0.5       | 0.048     |

All the maintenance activities concluded at 10:00 UTC; the intervention at WE was still in progress, so I set TROUBLESHOOTING mode.

At 11:30 UTC the intervention at WE was concluded and the commissioning crew started to recover the standard condition for all the devices; recovery in progress.

TCS

The WE ring heater was switched off from 12:35 UTC to 12:38 UTC; the operation was needed to recover the communication.

After the backbone fibers recovery,

- all the WEB DaqBoxes have been successfully reconfigured

- the WEB cameras readout has been restored

- the SWEB LC and SEB loops have been successfully closed

- the NCAL and PCAL loops have been successfully closed

The failing monomode backbone fibers to the West End Building patch panels from the Central Building and the Control Building were reconnected today; the work ended at 13:30 LT.

Except for the multimode patches, which are on spare fibers, all the other DAQ connections have been patched back to the patch panel positions they had before the failure.

The DET_MAIN automation node was stuck in a loop because the B1 PD3 offset had drifted, making the node think that the slow OMC shutter was open while it was actually closed.

Around 09:27 UTC I updated the B1 PD3 offset by 0.1 uW so that the power reads zero when there is no beam on the photodiode.

The DET_MAIN node is currently paused; it should be unpaused when there is a beam on B1p, for example in NI single bounce.
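The following sketch shows how a drifted photodiode offset can mislead a threshold-based "beam present" check. It is not the actual DET_MAIN logic, which is not described in this entry: the function name, the threshold and the sign convention of the offset are assumptions chosen for illustration, and only the 0.1 uW size of the correction comes from the entry.

```python
def shutter_looks_open(raw_power_uw, offset_uw, threshold_uw):
    """Return True when the offset-corrected power exceeds the 'beam present' threshold."""
    return (raw_power_uw - offset_uw) > threshold_uw

# Hypothetical situation: no beam on the photodiode, dark reading drifted to 0.1 uW.
raw_dark_uw = 0.1
threshold_uw = 0.05  # assumed decision threshold, not the real one

stale = shutter_looks_open(raw_dark_uw, offset_uw=0.0, threshold_uw=threshold_uw)
updated = shutter_looks_open(raw_dark_uw, offset_uw=0.1, threshold_uw=threshold_uw)

print("stale offset   -> shutter looks open:", stale)
print("updated offset -> shutter looks open:", updated)
```

With the stale offset the dark reading alone crosses the threshold, so a state machine that waits for the shutter to read as closed would never leave that state.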

ITF found with ITF_LOCK and ARMS_LOCK in DOWN and paused, DRMI_LOCK in MISALIGNED_PR_SR and paused, and in TROUBLESHOOTING mode due to the WE fiber connection loss. Therefore none of the activities planned for this afternoon (calibration with ITF unlocked and ISC injections) can be carried out, and the night shift will be cancelled.

19:25 UTC: closed entrance 2 of the 1500W building (Fig. 1).

Guard tour (UTC)

17:53 -> 18:28

21:15 ->

ISYS

13:40 - 14:50UTC Measurement of PSTAB TF in LaserLab by Spinicelli to investigate recent INJ unlocks. IMC unlocked at 14:18UTC, recovered at 14:45UTC.

16:28 - 16:47UTC check on IPATSiA by Tringali and Spinicelli. Nothing anomalous to report except 1500W building entrance n.2 (towards WE) found wide open.

IMC unlocked again at 16:44UTC, opened PSTAB loop at 16:56UTC under request by Spinicelli.

In order to debug the recent problem of stability of the PSL/INJ system, we measured the TF of the PSTAB (fig. 1) and the error signal of the PMC loop (fig. 2).

At first sight we could not spot any strange behaviour in those parts, but we will keep investigating tomorrow morning.


We have tuned the amplitude sent to the DAC channel for the NEB automated acoustic injections.

The value of the amplitude parameter in the .ini file is Amp = 0.1 (purple curve).

The same should be applied to the WEB automated acoustic injections.

We have left the loudspeaker connected to the PDU and ready for automated injections.

VPM process: AcousticInjectionNEB

Configuration parameter: /virgoData/NoiseInjections/MagneticInjectionsO4/EnvSwepts/config/ConfigurationAcousticColoredNEB.ini
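For the WEB case, the same amplitude change could be scripted along these lines. This is only a sketch: the layout of the real configuration file is not shown in this entry, so the `[Injection]` section name, the example content and the helper `set_amplitude` are hypothetical, and the file may not follow the ini syntax that Python's `configparser` expects.

```python
import configparser
import io

# Hypothetical file content; the real ConfigurationAcousticColoredNEB.ini is not shown here.
EXAMPLE_INI = """
[Injection]
Amp = 0.05
"""

def set_amplitude(ini_text, amp, section="Injection"):
    """Return ini_text with the Amp parameter of the given section set to amp."""
    parser = configparser.ConfigParser()
    parser.optionxform = str  # keep the key spelled 'Amp' instead of lowercasing it
    parser.read_string(ini_text)
    parser[section]["Amp"] = str(amp)
    out = io.StringIO()
    parser.write(out)
    return out.getvalue()

updated = set_amplitude(EXAMPLE_INI, 0.1)
print(updated)
```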

other activities carried out:

- check of injection system lock stability (Spinicelli);

- acoustic injections test at NE (Tringali);

The following report has been submitted to the On-call interface.

On-call events -> Electricity

Title: Control network UPS building 3000 West

Author(s): dandrea

| Called at: 01:55, 14-07-2025, by: Menzione |

| Remote intervention: Started: 00:00, 14-07-2025; Ended: |

| On-site intervention: Started: 01:55, 14-07-2025; Ended: 02:55, 14-07-2025 |

| Status: Resolved |

| Operator when issue resolved: Menzione |

Details:

I was called by the operator on duty who informed me that he could no longer connect with the devices in building 3000 West.

I have been asked to intervene for a suspected anomaly on the UPS electrical network.

I intervened on the site and could verify that the UPS of the terminal building were working regularly, then I inspected the interior of the building to check for any interruptions on the secondary distribution but everything was regularly fed.

I deduced that it was a problem related to the data network, so I did an inspection at the network switches of the terminal building and also at the intermediate building West, but everything was in regular operation.

I told the operator that the problem was with the data transmission.

* Note that any files attached to this report are available in the On-call interface.

The monomode fiber reconnection intervention at the West End Building is scheduled for tomorrow, Tuesday, at 8:30 LT.

After checking with Alain, it turns out that among the monomode fibers only the Lk3 link (couple 7-8) is working, while the multimode links are working.

Since there are no spare monomode backbone fibers left at WEB, we are scheduling an urgent intervention by an external firm to reconnect and check at least 24 fibers.

It turned out that other patch panels at WEB were also visited by mice, affecting the DAQ connections.

At about 10:15 LT I also connected the DAQ fibers from CEB to WEB using spare fibers, moving them as follows:

- Multimode PP-WEB to CEB: from 3-4 to 5-6 (note that in this case the PP does not show visibly eaten fibers)

- Monomode PP-WEB to CEB:

  - from 9-10 to 7-8

  - from 21-22 to 15-16

  - from 23-24 to 17-18

Since there are no more monomode spare fibers left in the WEB to CEB patch panel, the last fiber couple has been rerouted as follows:

Monomode PP-WEB to CEB: from 11-12 to PP-WEB to COB 13-14 then to PP-COB-to-CEB3 13-14
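To keep track of the moves listed above, they can be recorded as a small lookup table. The sketch below is only a bookkeeping aid; the names `MOVES`, `new_pair` and `check_no_shared_targets` are illustrative, and the 11-12 link is left out because it is rerouted through the COB patch panels, not onto another pair of the same panel.

```python
# Fiber pair moves on the PP-WEB to CEB patch panel, as listed in this entry.
MOVES = {
    "multimode": {"3-4": "5-6"},
    "monomode": {"9-10": "7-8", "21-22": "15-16", "23-24": "17-18"},
}

def new_pair(kind, old_pair):
    """Return the pair now carrying the link, or the old pair if it was not moved."""
    return MOVES.get(kind, {}).get(old_pair, old_pair)

def check_no_shared_targets(moves):
    """Raise ValueError if two links of the same fiber type were moved onto the same pair."""
    for kind, mapping in moves.items():
        targets = list(mapping.values())
        if len(targets) != len(set(targets)):
            raise ValueError(f"duplicate target pair in {kind} moves")

check_no_shared_targets(MOVES)
print(new_pair("monomode", "21-22"))
```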

Here I attach the two plots that I refer to in the entry.

The following report has been submitted to the On-call interface.

On-call events -> Interferometer Sensing & Control

Title: CH tuning

Author(s): casanueva, cifaldi

| Called at: 12:45, 13-07-2025, by: None |

| Remote intervention: Started: 12:45, 13-07-2025; Ended: 14:30, 13-07-2025 |

| On-site intervention: Started: ; Ended: |

| Status: Resolved |

| Operator when issue resolved: Montanari |

Details:

Since the interferometer was so unstable, we decided together with Michal to make a CH step already on Sunday. Based on past experience we applied a 10% common decrease of the CH. We waited for 1 hour and then tried to relock. The lock acquisition was successful at the second attempt. Figure 1 shows the evolution of the DIFFp TY gain, which is significantly better than in the morning.

Figure 2 shows the evolution of the sidebands in the CITF during the CH transient. There is no significant variation, which is encouraging.

* Note that any files attached to this report are available in the On-call interface.


The following report has been submitted to the On-call interface.

On-call events -> Air Conditioning

Title: WEB LPG Boiler down

Author(s): soldani

| Called at: 14:00, 12-07-2025, by: Alarm or monitoring system |

| Remote intervention: Started: ; Ended: |

| On-site intervention: Started: 14:34, 12-07-2025; Ended: 14:59, 12-07-2025 |

| Status: Resolved |

| Operator when issue resolved: Tomelleri |

Details:

Alarm received from DMS: the boiler was blocked, reporting an electronic control mainboard error.

The alarm was cleared and reset, and the boiler restarted correctly. A technical intervention by the Maintenance Service is required on Tuesday, July 15, to check and possibly replace the electronic board.

* Note that any files attached to this report are available in the On-call interface.

The optical fibers between the CEB-DAQroom and the WEB used for the Tolm network do not work: neither those using the Tolm v1 format for the suspensions nor those using the Tolm v2 format for the DAQ boxes.

Below are the details of the connections between the MxDx and the patch panel at WE:

- Rack_E4-TDB_v1_SN09A - multimode fiber - PP-CEB-03 (10MHz)

- Rack_E4-TDB_v1_SN09A - multimode fiber - PP-CEB-04 (IRIGB)

- Rack_E4-MxDx_v2_SN10-LK1 - monomode fiber - Rack_E4-PP-WEB-RACK-WALL - PP-11_12 - PP-WEB-CEB-11_12

- Rack_E4-MxDx_v2_SN10-LK3 - monomode fiber - Rack_E4-PP-WEB-RACK-WALL - PP-09_10 - PP-WEB-CEB-09_10

- Rack_E2-7slot-VME-MxDx_v1_SN12-LK6 - monomode fiber - PP_WEB-CEB-21-22

- Rack_E2-7slot-VME-MxDx_v1_SN12-LK7 - monomode fiber - PP_WEB-CEB-23-24

ITF found locked in LN3 and in SCIENCE mode.

At 23:33 UTC, data from all experimental devices at WE became unavailable, including SUSP, minitower, racks, ENV sensors, RH, Coil driver, MotSwitch, FlipMirror, PCal, ALS and all VAC channels.

I assumed a problem with the WE UPS (also not available), so I contacted the Electricity expert.

D'Andrea went into the WE building, so I set TROUBLESHOOTING mode from 00:19 to 01:00 UTC. After a check, he verified that all the electricity systems were OK, so I contacted the OnLine&Computing on-call. After a remote investigation, Cortese decided to come on site to check the status of the WE fiber switch. The switch was OK, but a few fibers had been gnawed by mice.

At 01:28 UTC the ITF unlocked. The WE suspension and SWEB loops opened and could not be closed.

All servers and all processes for WE were no longer reachable. W cavity misaligned. Metatron nodes in Cm error. GAME OVER.

01:33 UTC - TROUBLESHOOTING mode set.

After the replacement of the fibers, carried out by Cortese, I started to recover the lost DAQ servers and, with the help of Masserot, we are trying to recover the DAQBoxes. Unfortunately we still have network communication problems.

Computing and DAQ task forces at work...

Guard tour (UTC):

23:32 - 00:11

01:00 - 01:40

DAQ

(14-07-2025 03:15 - ) Operator on site with expert from remote

Status: On-going

Description: I restarted several servers and recovered several processes, but not all of them; in particular, the DAQ boxes are not yet recovered.

Electricity

(14-07-2025 00:00 - 14-07-2025 01:00) On site

Status: Ended

Description: WE UPS check. UPS ok.

On-line Computing & Storage

(14-07-2025 01:00 - 14-07-2025 03:00) On site

Status: Ended

Description: WE fibers gnawed by mice.

Fibers replaced successfully.


The following report has been submitted to the On-call interface.

On-call events -> Network

Title: Network backbone link interrupted between COB and WEB buildings

Author(s): cortese

| Called at: 03:00, 14-07-2025, by: Alarm or monitoring system |

| Remote intervention: Started: 03:00, 14-07-2025; Ended: 03:30, 14-07-2025 |

| On-site intervention: Started: 03:50, 14-07-2025; Ended: 05:15, 14-07-2025 |

| Status: Resolved |

| Operator when issue resolved: Menzione |

Details:

Called by Antonella Bozzi after the escalation of the problem suggested a networking issue.

The interruption was caused by the failure, at 1:30 AM LT, of all 4 monomode fibers connecting the network star-center switch in COB to the West End Building switch.

The failure was due to a mouse eating fibers 1, 2, 3, 4, 23 and 24 directly in the network rack at the north-east corner of the WEB.

The network backbone connection was recovered around 4:45 AM LT using spare fibers.

The Hartmann Sensor monomode fiber connection was also broken and was moved to another spare couple.

However, only one of the two redundant network backbone links turns out to be working. This means that one of the spare fibers does not work either.

The redundancy will be recovered during next Tuesday's maintenance.
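Since this report quotes local time (LT) while the operator entries use UTC, the sketch below converts the two LT timestamps given above to UTC. It assumes that LT is Central European Summer Time (UTC+2), which applies in Italy in July; the helper name `lt_to_utc` is illustrative.

```python
from datetime import datetime, timedelta, timezone

# Assumption: LT is Central European Summer Time (UTC+2).
CEST = timezone(timedelta(hours=2), name="CEST")

def lt_to_utc(year, month, day, hour, minute):
    """Convert a local-time (CEST) timestamp to UTC."""
    local = datetime(year, month, day, hour, minute, tzinfo=CEST)
    return local.astimezone(timezone.utc)

failure = lt_to_utc(2025, 7, 14, 1, 30)    # fiber failure, 1:30 AM LT
recovery = lt_to_utc(2025, 7, 14, 4, 45)   # backbone recovered, 4:45 AM LT

print(failure.strftime("%d-%m-%Y %H:%M UTC"))
print(recovery.strftime("%d-%m-%Y %H:%M UTC"))
```

Under this assumption the failure time falls on the evening of 13-07-2025 in UTC, a few minutes before the loss of WE data that the operator reported at 23:33 UTC.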

* Note that any files attached to this report are available in the On-call interface.